Project Description

The project is about procedural content generation: it uses content selection algorithms to create the soundtrack of a video game. In this case, both the algorithm and the music were made by me. The project was made using Unity 3D and FMOD Studio.

The project is built around a battle with a monster, where the soundtrack adapts to various states such as player health, boss health, attacks used, player/boss status effects (frozen, etc.), the battle arena, and so on.

The project can be found on the following Gitlab Repository: https://gitlab.com/Robinaite/gameai-coursework-project

Below are some excerpts from the project report explaining the choices that were made:

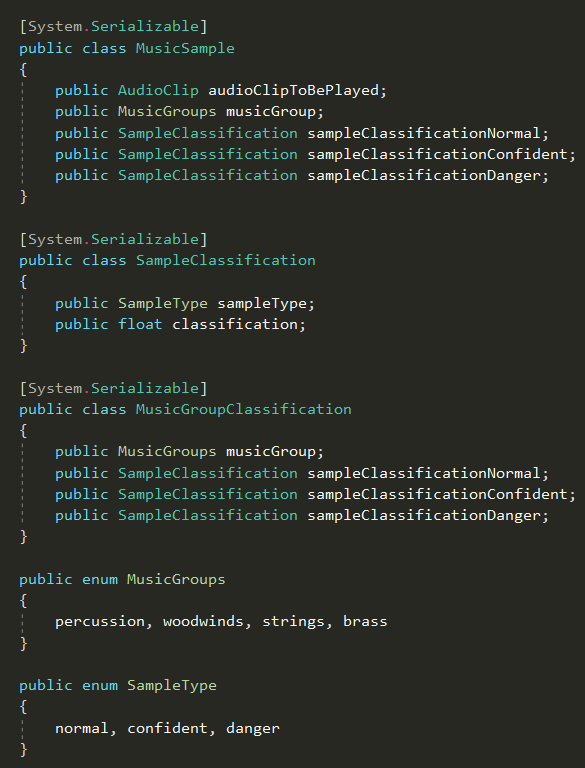

We need to decide which parts of the music will be dynamic. After some thought, the following music groups will be used:

- Percussion

- Woodwinds

- Strings

- Brass

Each of these music groups will have various samples, which will be swapped based on what is happening in-game.

After creation, each sample needs to be classified. The classification works as follows: there are 3 types of samples, normal, confident, and danger, and every sample is given a value between 0 and 1 for each type, expressing how strongly it belongs to that type. For example, a really dangerous-sounding percussion part would be classified as normal – 0, confident – 0, danger – 1, whereas a sound that is really dangerous but also gives you a bit of confidence would be normal – 0, confident – 0.3, danger – 1.
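As a minimal sketch (in Python rather than the project's Unity C#), a classified sample could be stored as a small record holding one value per type. The `MusicSample` name, its fields and the range check are hypothetical, not taken from the project; the two instances reproduce the percussion examples above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MusicSample:
    """Hypothetical record for one music sample and its classification."""

    name: str
    group: str        # music group, e.g. "Percussion", "Woodwinds", "Strings", "Brass"
    normal: float     # 0-1: how much the sample sounds "normal"
    confident: float  # 0-1: how much the sample sounds "confident"
    danger: float     # 0-1: how much the sample sounds "dangerous"

    def __post_init__(self) -> None:
        # Reject classification values outside the closed range [0, 1].
        for value in (self.normal, self.confident, self.danger):
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"classification value {value} is outside [0, 1]")


# The two percussion examples from the text above.
pure_danger = MusicSample("perc_danger", "Percussion", normal=0.0, confident=0.0, danger=1.0)
danger_with_confidence = MusicSample("perc_danger_conf", "Percussion", normal=0.0, confident=0.3, danger=1.0)
```

Validating the range when the record is created means a mis-classified sample is caught at load time rather than silently skewing the later distance search.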

The image below shows what each music sample and music group needs in order to function.

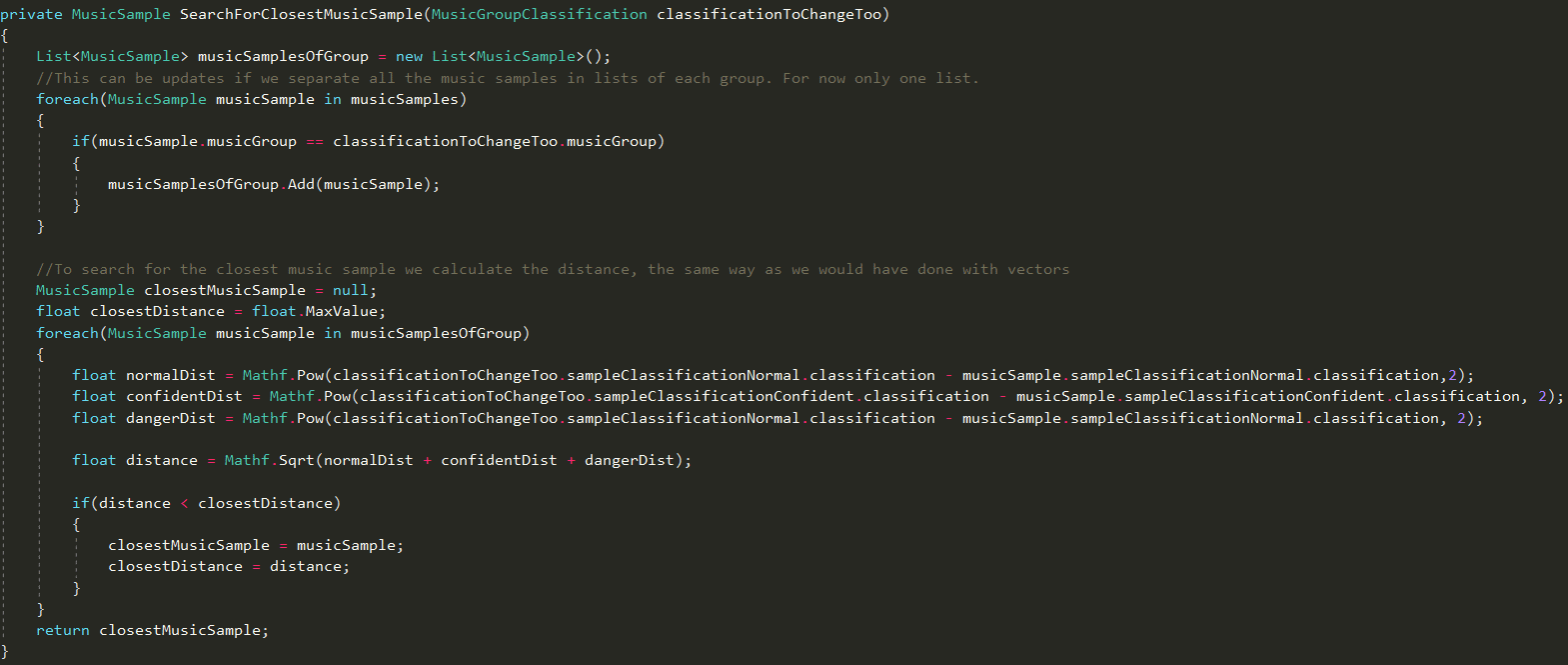

The selection process for the samples works as follows:

Based on a combination of various in-game parameters, the game decides which classification is appropriate at that moment, that is, how much of each sample type is needed, for example normal – 0.5, confident – 0.8, danger – 0.1. After deciding the classification, the game searches for the samples whose classifications are closest to it.
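The report does not specify how the in-game parameters are combined into a target classification, so the following is only an illustration of the idea. The function `target_classification` and its formulas are hypothetical assumptions that use just player and boss health (as fractions from 0 to 1); a real game would weigh more states.

```python
def target_classification(player_health: float, boss_health: float) -> tuple:
    """Hypothetical mapping from game state to a (normal, confident, danger) target.

    Both inputs are health fractions in [0, 1]; the formulas are illustrative only.
    """
    danger = 1.0 - player_health       # the lower the player's health, the more danger
    confident = 1.0 - boss_health      # the weaker the boss, the more confidence
    normal = max(0.0, 1.0 - max(danger, confident))  # calm when neither dominates
    return (normal, confident, danger)


# Healthy player facing a badly wounded boss: mostly confident.
print(target_classification(player_health=1.0, boss_health=0.25))  # (0.25, 0.75, 0.0)
```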

In the image below, we can see how the search algorithm works. We start by getting all samples of the respective music group that may change. We then loop over these samples and, for each one, calculate the distance between its classification and the target classification across all three classification types. The distance is calculated as follows:

$$\text{distance} = (x_1 - x_2)^2 + (y_1 - y_2)^2 + (z_1 - z_2)^2$$

Where x, y and z are the normal, confident and danger classifications respectively; subscript 1 denotes the classification we want to change to, and subscript 2 the classification of the music sample being evaluated. This is the squared Euclidean distance between the two classifications.

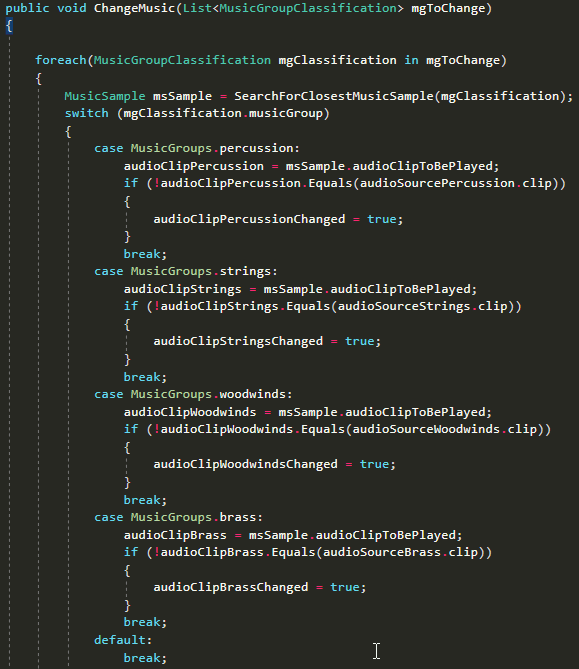
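The search can be sketched in Python as below. This is a minimal illustration under assumptions, not the project's C# code: `MusicSample`, `squared_distance` and `closest_sample` are hypothetical names. It follows the two steps from the text: collect the samples of the music group that may change, then loop over them keeping the one with the smallest distance.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Classification = Tuple[float, float, float]  # (normal, confident, danger)


@dataclass(frozen=True)
class MusicSample:
    name: str
    group: str
    normal: float
    confident: float
    danger: float


def squared_distance(sample: MusicSample, target: Classification) -> float:
    """(x1 - x2)^2 + (y1 - y2)^2 + (z1 - z2)^2 with x, y, z = normal, confident, danger."""
    x1, y1, z1 = target                                          # 1: classification we change to
    x2, y2, z2 = sample.normal, sample.confident, sample.danger  # 2: the sample's classification
    return (x1 - x2) ** 2 + (y1 - y2) ** 2 + (z1 - z2) ** 2


def closest_sample(samples: Sequence[MusicSample], group: str,
                   target: Classification) -> Optional[MusicSample]:
    """Return the sample of `group` closest to `target`, or None if the group is empty."""
    candidates = [s for s in samples if s.group == group]  # samples of the music group to change
    best, best_distance = None, float("inf")
    for sample in candidates:                              # loop and keep the smallest distance
        distance = squared_distance(sample, target)
        if distance < best_distance:
            best, best_distance = sample, distance
    return best


samples = [
    MusicSample("calm", "Percussion", 1.0, 0.2, 0.0),
    MusicSample("heroic", "Percussion", 0.4, 0.9, 0.1),
    MusicSample("tense", "Percussion", 0.0, 0.0, 1.0),
    MusicSample("heroic_strings", "Strings", 0.5, 0.8, 0.1),
]
print(closest_sample(samples, "Percussion", (0.5, 0.8, 0.1)).name)  # heroic
```

For the target (0.5, 0.8, 0.1), the distances are 0.62 for "calm", 0.02 for "heroic" and 1.70 for "tense"; "heroic_strings" would match exactly but is skipped because it belongs to another music group.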

The method below is the public method used throughout the game to trigger these music changes.

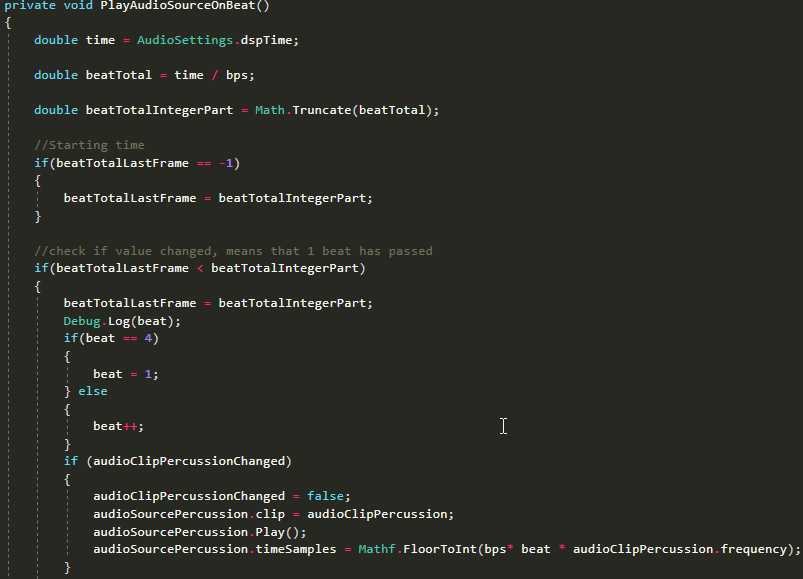

To account for music synchronization issues the following method was created:

In this method, a new audio clip only starts playing on one of the music's beats, the same beats on which the clips themselves start. First, we convert the current dspTime (the elapsed time of the audio system) into the total number of beats that have elapsed. BPS is Beats Per Second, which is calculated from a given BPM (Beats Per Minute) as BPS = BPM / 60. We then check whether the integer part of the total beats has changed; if it has, we are on the next beat. In this case, the method assumes 4 beats per bar (4/4 time).

Lastly, we check whether the audio clip has changed, and if so, swap in the new clip and play it immediately. We then set the new clip's playback position to the respective beat, expressed in PCM samples, because a position in samples is more precise for audio than a time in seconds.

Note: The other music groups are not displayed in the image above, but the process is the same as in the if statement starting at audioClipPercussionChanged.
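The beat logic described above can be sketched as follows. This is an illustrative Python version under stated assumptions, not the project's method: `BeatClock` is a hypothetical class, `dsp_time` plays the role of Unity's `AudioSettings.dspTime`, the 48 kHz sample rate is assumed, and each clip is assumed to be exactly one 4/4 bar long so that the current beat maps directly to a PCM offset (in Unity such an offset could, for example, be assigned to `AudioSource.timeSamples`).

```python
import math


class BeatClock:
    """Hypothetical beat tracker; dsp_time stands in for Unity's AudioSettings.dspTime."""

    def __init__(self, bpm: float, beats_per_bar: int = 4, sample_rate: int = 48000) -> None:
        self.bps = bpm / 60.0              # Beats Per Second from Beats Per Minute
        self.beats_per_bar = beats_per_bar
        self.sample_rate = sample_rate     # PCM samples per second of the clips (assumed)
        self.last_beat = -1                # no beat seen yet

    def new_beat(self, dsp_time: float) -> bool:
        """True when the integer part of the elapsed beats changed since the last call."""
        beat = math.floor(dsp_time * self.bps)
        if beat != self.last_beat:
            self.last_beat = beat
            return True
        return False

    def beat_in_bar(self) -> int:
        """Index of the current beat inside a 4/4 bar: 0, 1, 2 or 3."""
        return self.last_beat % self.beats_per_bar

    def pcm_offset(self) -> int:
        """PCM sample position of the current beat, assuming one-bar clips."""
        samples_per_beat = self.sample_rate / self.bps
        return round(self.beat_in_bar() * samples_per_beat)


clock = BeatClock(bpm=120)
for t in (0.0, 0.2, 0.5):
    if clock.new_beat(t):
        print(t, clock.beat_in_bar(), clock.pcm_offset())
```

At 120 BPM (2 beats per second) a beat lasts 0.5 s, which at the assumed 48 kHz is 24000 PCM samples; the loop therefore reports beats at t = 0.0 and t = 0.5, but not at t = 0.2, which still falls within the first beat.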

The classification is done for each music group separately, because each music group is more closely related to a specific in-game event; for example, health is mostly associated with drums/percussion. However, multiple music groups may be affected at the same time. When multiple classifications are requested for the same music group, they are combined by averaging them, or by some other selection algorithm.
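The averaging option can be sketched as a small collector that gathers all requests made during a frame and resolves them per music group. The `ClassificationRequests` class and its `request`/`resolve` methods are hypothetical, and a plain component-wise mean is only one of the possible combination strategies the text mentions.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Classification = Tuple[float, float, float]  # (normal, confident, danger)


class ClassificationRequests:
    """Hypothetical collector of per-group classification requests from game systems."""

    def __init__(self) -> None:
        self._pending: Dict[str, List[Classification]] = defaultdict(list)

    def request(self, group: str, target: Classification) -> None:
        # Several systems (health, attacks, status effects...) may target the same group.
        self._pending[group].append(target)

    def resolve(self) -> Dict[str, Classification]:
        """Average each group's pending requests component-wise, then clear them."""
        resolved: Dict[str, Classification] = {}
        for group, targets in self._pending.items():
            count = len(targets)
            resolved[group] = tuple(sum(t[i] for t in targets) / count for i in range(3))
        self._pending.clear()
        return resolved


requests = ClassificationRequests()
requests.request("Percussion", (0.0, 0.25, 1.0))   # e.g. low player health
requests.request("Percussion", (1.0, 0.75, 0.0))   # e.g. a successful attack
requests.request("Strings", (0.5, 0.8, 0.1))
print(requests.resolve())  # {'Percussion': (0.5, 0.5, 0.5), 'Strings': (0.5, 0.8, 0.1)}
```

Each resolved classification can then be passed to the nearest-sample search for its group.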

Pros & Cons of using Unity’s Audio System in our algorithm

The use of Unity’s audio system gives us some advantages and disadvantages. Starting with the advantages:

- It’s easier to set up, since it is built into Unity.

- Easier to iterate on.

- No 3rd party tools needed.

The disadvantages are:

- Synchronization of music is outside the scope of Unity’s audio system.

- No way to switch music clips with a fade-in/out without building the fade-in/out system ourselves.

- More work if the algorithm gets more complex.

Changing from Unity’s Audio System to FMOD Audio System

This change was made to solve a couple of issues, mainly to make it easier to synchronize music samples and to handle their fade-in/fade-out.

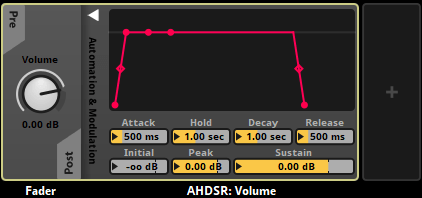

To add better fade-in/fade-out capabilities, we made two changes. In FMOD, we added modulation to the event volume, which gives us the settings needed to control how the event fades in when it starts and fades out when it stops.

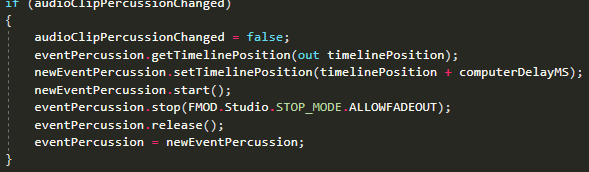

In our algorithm, we changed how the new music event is started:

Thanks to FMOD’s capabilities, we can now get the current timeline position and apply it to the new event, so that it continues where the previous one left off. We also changed the order: first we start the new event, and only then do we stop the current one, which gives a smoother cross-fade.

I also added a computer delay offset to the timeline position that is set. This is needed to fine-tune the timeline position, because switching events can introduce some delay depending on the computer hardware. Most rhythm games, for example, apply a similar offset.
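The hand-over order can be illustrated with a mock event, shown here as a sketch only. `FakeEvent`, its methods and `switch_event` are hypothetical and merely record calls; in FMOD's C# API the corresponding steps would roughly be `EventInstance.getTimelinePosition`, `setTimelinePosition`, `start` and `stop(STOP_MODE.ALLOWFADEOUT)`, with timeline positions in milliseconds.

```python
from typing import List


class FakeEvent:
    """Hypothetical stand-in for an FMOD Studio event instance; it only records calls."""

    def __init__(self, name: str, log: List[str], position_ms: int = 0) -> None:
        self.name = name
        self.log = log                # shared list recording the order of operations
        self.position_ms = position_ms
        self.playing = False

    def get_timeline_position(self) -> int:
        return self.position_ms

    def set_timeline_position(self, position_ms: int) -> None:
        self.position_ms = position_ms
        self.log.append(f"seek {self.name} {position_ms}")

    def start(self) -> None:
        self.playing = True
        self.log.append(f"start {self.name}")

    def stop_allow_fadeout(self) -> None:
        # A real event would fade out according to its volume modulation.
        self.playing = False
        self.log.append(f"stop {self.name}")


def switch_event(current: FakeEvent, new: FakeEvent, computer_delay_ms: int) -> FakeEvent:
    position = current.get_timeline_position()               # where the music is now
    new.set_timeline_position(position + computer_delay_ms)  # continue there, plus delay offset
    new.start()                                              # start the new event first...
    current.stop_allow_fadeout()                             # ...and only then stop the old one
    return new


log: List[str] = []
old = FakeEvent("old", log, position_ms=1500)
old.start()
switch_event(old, FakeEvent("new", log), computer_delay_ms=20)
print(log)  # ['start old', 'seek new 1520', 'start new', 'stop old']
```

Because the old event is only stopped after the new one has started, its fade-out overlaps the new event's fade-in, which is what produces the cross-fade.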

Improvements & Efficiency

The algorithm is efficient: its most time-consuming part is O(n) in the number of music samples, because it needs to loop through all of them.

For example, to select the music sample we initially used Unity 3D's built-in vector distance calculation. We then optimized it: since we only need to compare distances with each other, not know their exact values, we can drop the final square root. Because the square root is monotonically increasing, removing it does not change which sample is closest, and it saves a relatively expensive operation for every sample searched.
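A quick check of this reasoning in Python, on randomly generated classifications (the data and function names are illustrative, not from the project): ranking by the squared distance picks the same sample as ranking by the true Euclidean distance. The squared version also multiplies each difference by itself instead of calling a power function.

```python
import math
import random


def squared_distance(a, b):
    # Multiply each difference by itself: no pow() call and no square root.
    return sum((x - y) * (x - y) for x, y in zip(a, b))


def euclidean_distance(a, b):
    return math.sqrt(squared_distance(a, b))


random.seed(0)
target = (0.5, 0.8, 0.1)
samples = [tuple(random.random() for _ in range(3)) for _ in range(1000)]

# sqrt is monotonically increasing, so both keys rank the samples in the same order
# (up to floating-point ties).
best_with_sqrt = min(samples, key=lambda s: euclidean_distance(s, target))
best_without_sqrt = min(samples, key=lambda s: squared_distance(s, target))
print(best_with_sqrt == best_without_sqrt)
```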

We also took memory into account when creating FMOD events: whenever an event instance is created, we release it, which lets FMOD free its memory once the event has stopped. Depending on how often this cleanup happens, there are around 4-8 events in memory at the same time.

Unfortunately, I was not able to compare the results against other algorithms: this kind of problem tends to be solved on a task-by-task basis, so no algorithms with a similar purpose were available for comparison.

There are, however, some improvements that could be made.

Currently the search loops twice in succession: first to collect the music samples of the right music group, and then over those samples to find the closest one. Instead, we could split the sample list by music group in advance, so the extra O(n) loop would no longer be needed.
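A sketch of this improvement, assuming the samples are grouped once when they are loaded: `index_by_group` and `closest_in_group` are hypothetical names, and the lookup then only loops over the requested group's samples, so no separate filtering pass over all samples is needed.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class MusicSample:
    name: str
    group: str
    normal: float
    confident: float
    danger: float


def index_by_group(samples):
    """Build once at load time: music group -> list of its samples."""
    by_group = defaultdict(list)
    for sample in samples:
        by_group[sample.group].append(sample)
    return dict(by_group)


def closest_in_group(by_group, group, target):
    """Single loop over only this group's samples; returns None for an unknown group."""
    def distance(s):
        dx = target[0] - s.normal
        dy = target[1] - s.confident
        dz = target[2] - s.danger
        return dx * dx + dy * dy + dz * dz

    return min(by_group.get(group, []), key=distance, default=None)


index = index_by_group([
    MusicSample("calm", "Percussion", 1.0, 0.2, 0.0),
    MusicSample("heroic", "Percussion", 0.4, 0.9, 0.1),
    MusicSample("tense", "Percussion", 0.0, 0.0, 1.0),
    MusicSample("heroic_strings", "Strings", 0.5, 0.8, 0.1),
])
print(closest_in_group(index, "Percussion", (0.5, 0.8, 0.1)).name)  # heroic
```

The grouping costs one pass at load time, after which each search is proportional to the size of a single music group rather than to the whole sample list.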

Another improvement would be to multiply each difference by itself instead of using Unity’s pow function. This is at least as accurate as calling pow and avoids the overhead of a general-purpose power function.
